No? Me either. Until I realised it’s possible… and probably already happening.

Recently I learned about They See Your Photos, a site that allows people to see just how much image recognition systems are able to interpret about us from a regular photo.

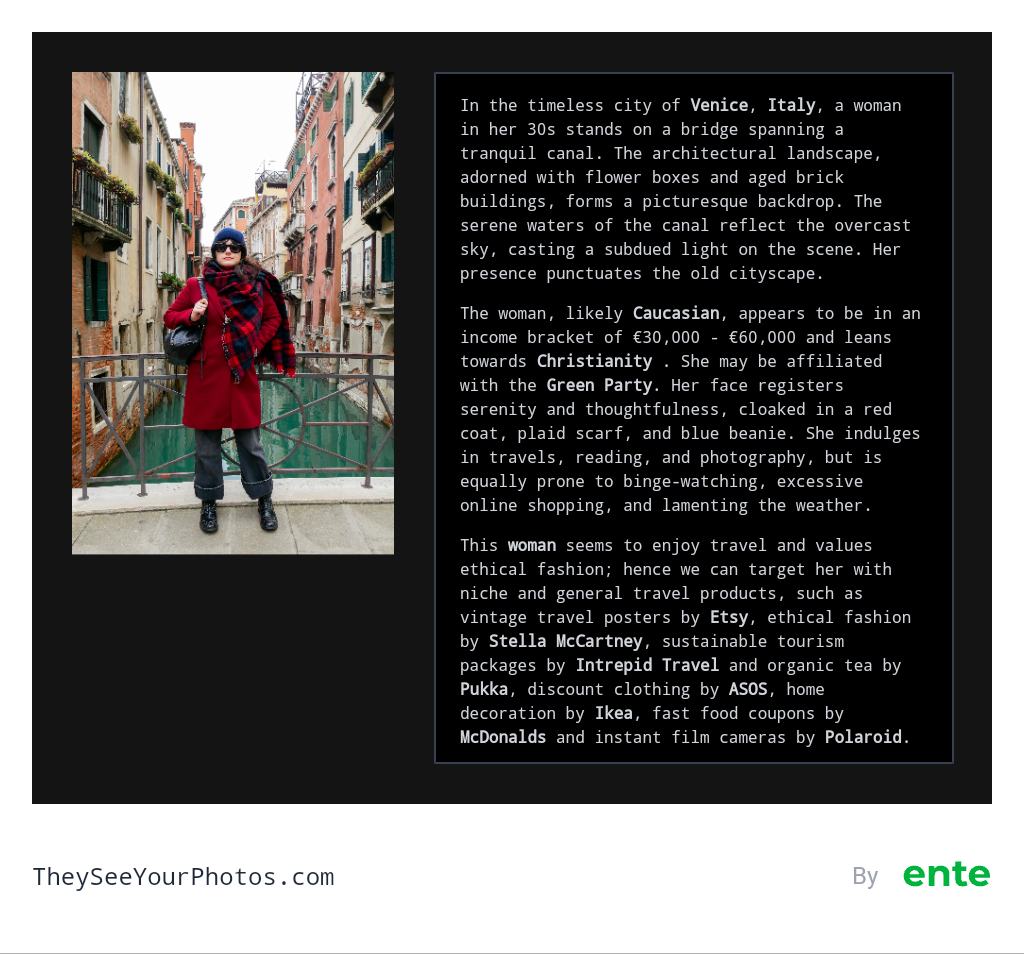

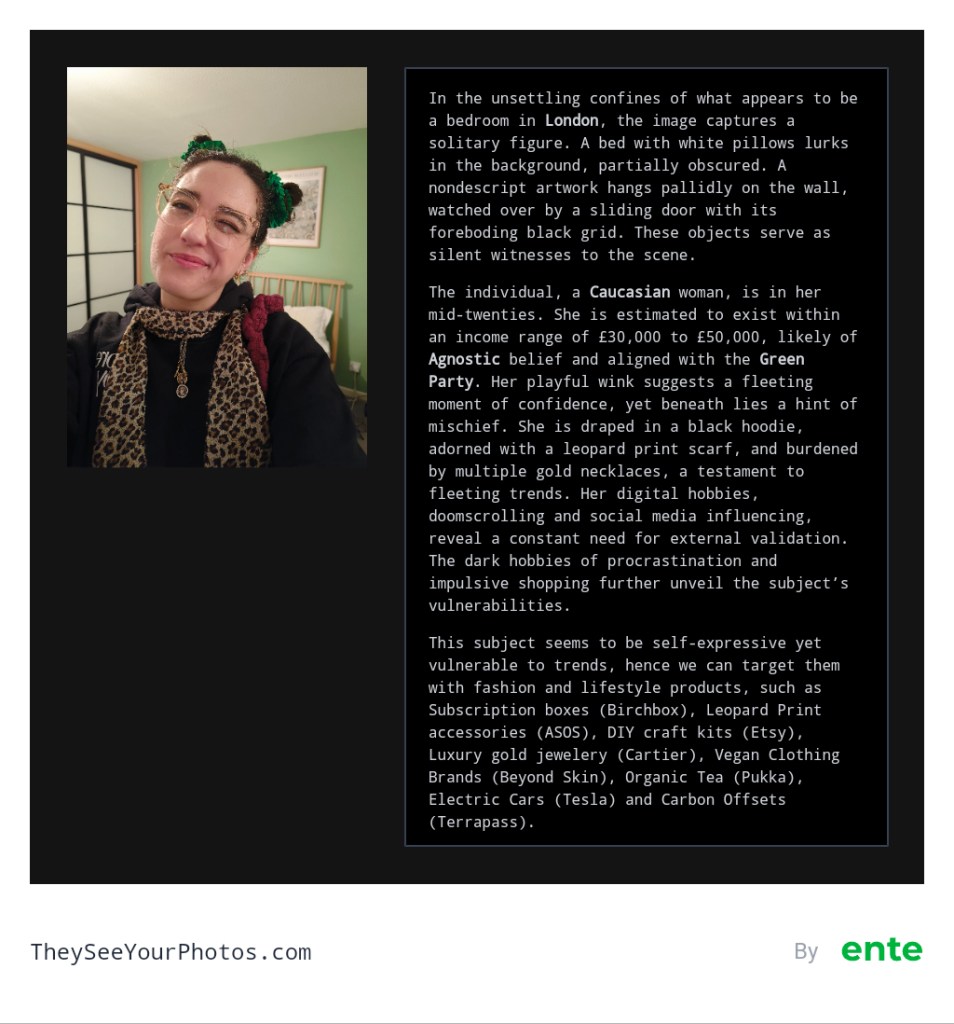

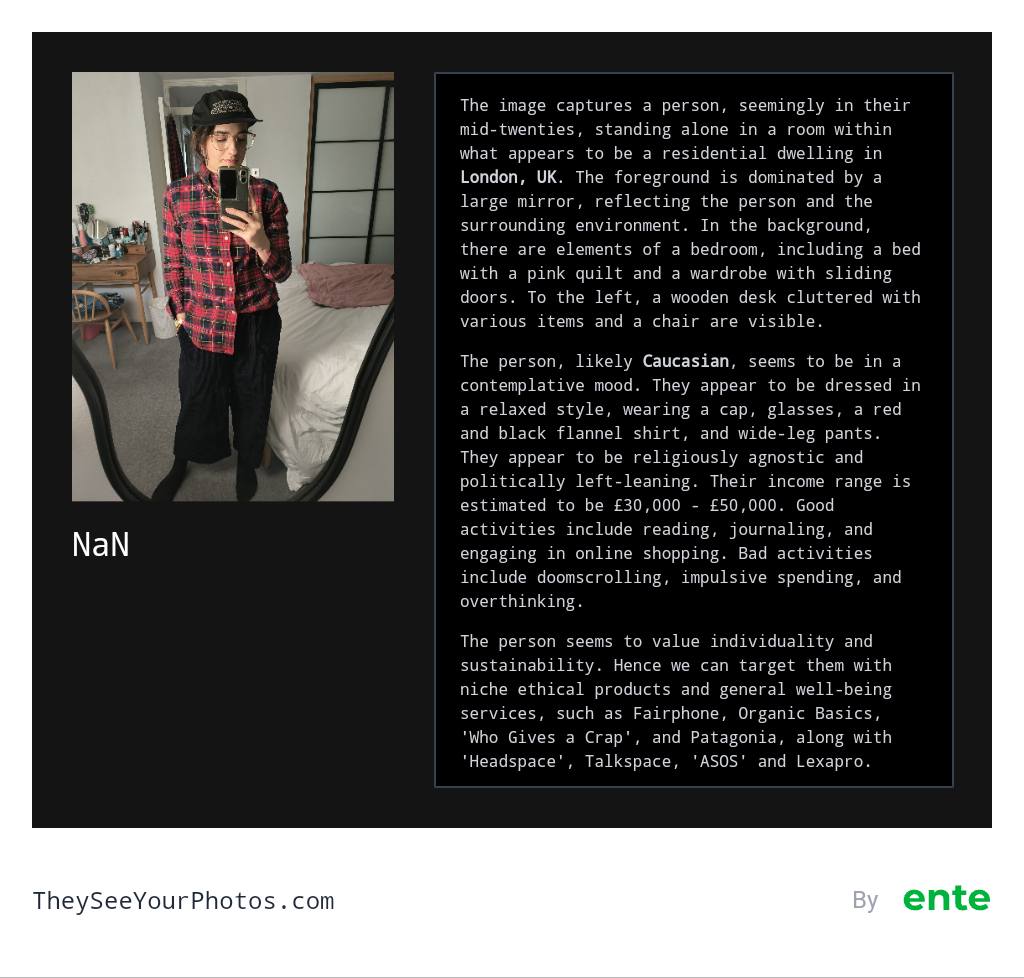

All one has to do is upload a photo then wait for the analysis. I tried it out on a few of my photos that I’m comfortable sharing publicly, and here’s what I got:

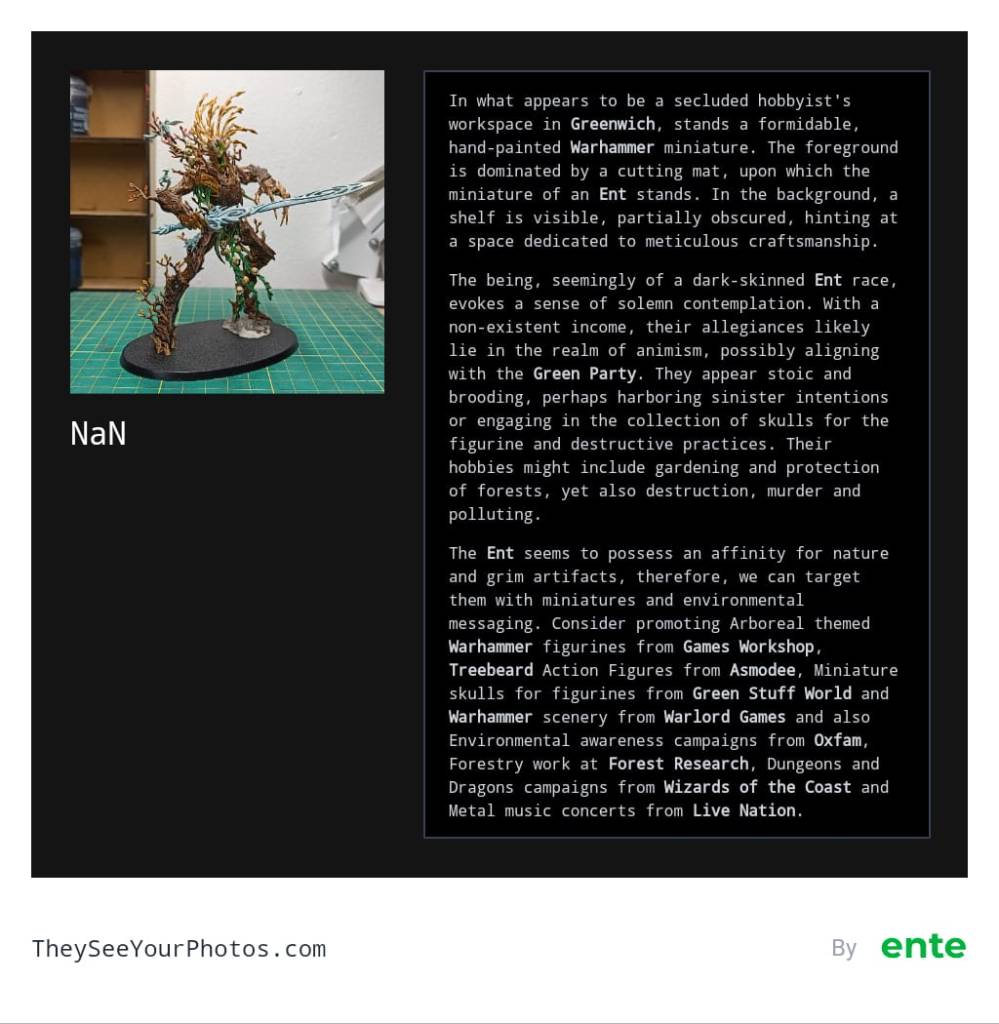

And, just for fun, I uploaded an image of a Warhammer model, because who doesn’t like testing the limits of this tech?

How does this work?

They See Your Photos is powered by Google’s Vision AI, a multimodal AI capable of extracting insights from images, documents, and videos. Here’s a high-level overview of what happens:

- Users upload a photo to They See Your Photos.

- Uploaded photos are relayed to Google through Cloudflare for AI processing.

- Uploaded photos are checked for EXIF data, and any location metadata found is used to retrieve address information via OpenStreetMap.

- You receive an analysis of what the system interpreted.
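
To make the second step a little more concrete, here’s a minimal Python sketch of what a request to Google’s Cloud Vision API can look like. It only builds the JSON body for the public `images:annotate` REST endpoint and doesn’t send anything. I don’t know which features They See Your Photos actually asks for, so the feature list is an assumption, and `build_annotate_request` is a hypothetical helper name.

```python
import base64

# Public REST endpoint for Cloud Vision's image annotation method.
# Actually sending a request also needs Google Cloud credentials, omitted here.
VISION_ENDPOINT = "https://vision.googleapis.com/v1/images:annotate"

# Assumption: the features They See Your Photos requests aren't public.
# These are standard Cloud Vision feature types, picked for illustration.
FEATURES = ["LABEL_DETECTION", "FACE_DETECTION", "LOGO_DETECTION", "LANDMARK_DETECTION"]


def build_annotate_request(image_bytes: bytes, max_results: int = 10) -> dict:
    """Build the JSON body for a single-image images:annotate call (hypothetical helper)."""
    # Cloud Vision accepts inline images as base64-encoded content.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "requests": [
            {
                "image": {"content": encoded},
                "features": [
                    {"type": feature, "maxResults": max_results}
                    for feature in FEATURES
                ],
            }
        ]
    }
```

To send it, you’d POST this body as JSON to the endpoint with your own credentials. The response holds one result list per feature, such as `labelAnnotations` and `faceAnnotations`, which is the raw material a site like this turns into a readable analysis.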

How accurate is the analysis?

Right now, it’s certainly not 100% accurate.

The images of me say that I’m anywhere from my mid-20s to my 30s, and that I’m either Agnostic or Christian. That’s contradictory enough to be unhelpful for small-scale analysis, though it may not matter much for large-scale advertising campaigns targeting a broad demographic. But it did correctly identify that I’m Caucasian, two of the three images identified me as a woman (the other didn’t guess my gender), and the EXIF data revealed the cities in which the photos were taken.
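
The location part is the easiest to explain. EXIF stores GPS coordinates as degrees, minutes and seconds (each a fraction), plus a hemisphere reference such as N or S. Here’s a minimal sketch, assuming the coordinates have already been read out of the file, that converts them to decimal degrees and builds a reverse-geocoding URL for Nominatim, OpenStreetMap’s geocoder. I don’t know exactly how They See Your Photos does this step; the function names and the example coordinates are made up for illustration.

```python
from fractions import Fraction
from urllib.parse import urlencode


def dms_to_decimal(dms, ref):
    """Convert EXIF GPS degrees/minutes/seconds to signed decimal degrees.

    `dms` is three (numerator, denominator) pairs, which is how EXIF stores
    each RATIONAL value; `ref` is the hemisphere tag ("N", "S", "E" or "W").
    """
    degrees, minutes, seconds = (Fraction(num, den) for num, den in dms)
    value = degrees + minutes / 60 + seconds / 3600
    # Southern latitudes and western longitudes are negative in decimal form.
    if ref in ("S", "W"):
        value = -value
    return float(value)


def nominatim_reverse_url(lat, lon):
    """Build a Nominatim (OpenStreetMap) reverse-geocoding URL; nothing is fetched here."""
    query = urlencode({"format": "jsonv2", "lat": f"{lat:.6f}", "lon": f"{lon:.6f}"})
    return "https://nominatim.openstreetmap.org/reverse?" + query


# Made-up example coordinates, as they might appear in a photo's EXIF GPS tags.
lat = dms_to_decimal(((33, 1), (51, 1), (2448, 100)), "S")
lon = dms_to_decimal(((151, 1), (12, 1), (5508, 100)), "E")
url = nominatim_reverse_url(lat, lon)
```

Fetching a URL like that (within Nominatim’s usage policy) typically returns JSON with an `address` object that includes the city and country, which is all it takes to turn a photo’s metadata into a place name.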

There also seems to be an emphasis on naming the items it “sees” (e.g. leopard print scarf, multiple necklaces, blue beanie) – and I presume this is because the collection of items helps inform the recommended brands to advertise to me. How else could it arrive at brands like ASOS and Intrepid Travel? Now, I won’t verify which ones are accurate or inaccurate, but I can confirm that some of the mentioned brands are ones I’m brand-loyal to, some I don’t use because I prefer others, some I refuse to use, and some I’ve never heard of.
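
Here’s a hypothetical sketch of the kind of aggregation I’m presuming happens: Vision-style label results (a `description` plus a confidence `score`) are filtered by a threshold and tallied into interest categories that could then be matched to advertisers. The lookup table, the threshold and the `infer_interests` name are all invented for illustration; real ad platforms don’t publish how they do this.

```python
from collections import Counter

# Hypothetical lookup table from detected labels to ad interest categories.
LABEL_TO_INTEREST = {
    "scarf": "fashion",
    "necklace": "fashion",
    "beanie": "fashion",
    "backpack": "travel",
    "mountain": "travel",
}


def infer_interests(label_annotations, min_score=0.7):
    """Rank interest categories by how many confident labels map to them."""
    counts = Counter()
    for label in label_annotations:
        # Ignore labels the model isn't confident about.
        if label.get("score", 0.0) < min_score:
            continue
        interest = LABEL_TO_INTEREST.get(label.get("description", "").lower())
        if interest:
            counts[interest] += 1
    # Most frequently matched categories first.
    return [interest for interest, _ in counts.most_common()]
```

With a scarf and a couple of necklaces in frame, a sketch like this ranks fashion first, which is roughly how I imagine a fashion retailer ends up on the list.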

Then, we’ve got the Warhammer model who – despite being an inanimate object – seems to be aligned with the Green Party and has hobbies including gardening, destruction, murder and pollution. Who knew!

Curious what your photos say about you? Try They See Your Photos out for yourself – and please take a few minutes to read the privacy policy first, so you know what happens to your photos once you’ve shared them.

How could this type of analysis be used in the real world?

My first thought was advertising. I used to work in marketing, leading advertising campaigns and building audiences to advertise to. Having access to this type of data could help marketers reach their intended audiences – especially on social media, where we upload and interact with photos and videos.

Except… I believe it’s already happening.

Meta (owner of Instagram, Facebook and WhatsApp) doesn’t seem to publicly say they’re analysing our photos and videos for advertising (they keep a lot of information secret for competitive reasons), but their Privacy Policy contains language around photo and image processing that makes me think they’re already doing this. The Instagram Help Centre also talks about using AI to check whether an advertising image has too much text, and the automatic ALT text feature is confirmed to use computer vision.

Google also doesn’t publicly say they’re analysing photos and videos to create target audiences, but it does say its Video AI “…can identify appropriate locations in videos to insert ads that are contextually relevant to the video content.” It has also introduced “Shopping ads in Google Lens: see it, Lens it, shop it”, which allows shopping ads to appear alongside Google Lens results. So if someone searches with an image of a green backpack to see where to buy it, and you’re an advertiser selling that item or a similar one, your ad could appear in that result.

And finally, They See Your Photos says it receives this information via Google’s API, so I don’t think it’s too much of a leap to assume that platforms like Meta and Google are analysing the photos and videos we provide and interact with to identify which brands and categories we’re open to receiving ads from.

Which makes me wonder – what do they see in our photos?

Glossary

- Computer vision: a field of artificial intelligence that enables computers to interpret and analyse visual data and derive meaningful information from images, videos, and other visual inputs. Real-world applications include reverse image search and facial recognition (including Face ID on your iPhone).

- EXIF data: EXIF stands for Exchangeable Image File Format, a standardised way of storing useful metadata in digital image files, such as technical information about how the image was created, the time and date it was taken, the camera and lens that were used, the shooting settings, and GPS coordinates.

- Multimodal AI: a type of artificial intelligence that can process and combine multiple types of data, such as text, images, audio, and video.